Introduction: Artificial intelligence adoption is now mainstream – 72% of businesses have adopted AI in at least one function. CIOs and procurement leaders face a pivotal choice in deploying AI solutions: running AI on-premises in their own data centers or leveraging cloud-based AI services. Each model has distinct implications for infrastructure, data management, security, and contract terms. On-premises deployments can offer greater control and security, while cloud AI provides faster startup, scalability, and lower upfront cost. This article compares on-prem vs. cloud AI deployments, examines common enterprise AI scenarios and vendor platforms, and dives into key contract differences. We also discuss risks and hidden pitfalls in contract language and offer negotiation tips for each deployment type. The goal is an advisory, customer-centric perspective to help enterprise buyers make informed decisions and secure favorable contract terms.

On-Premises vs. Cloud: Key Practical Differences

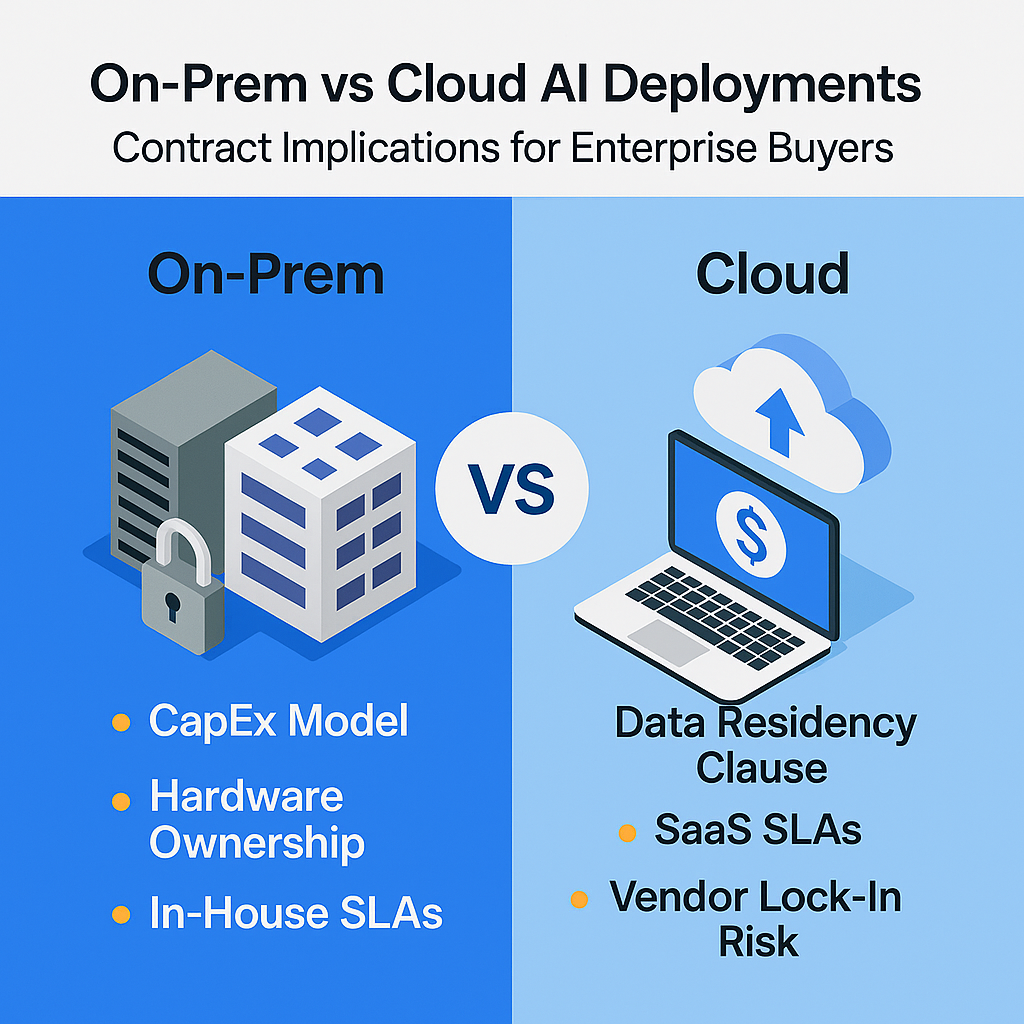

Before delving into contracts, it’s important to understand how on-prem and cloud AI deployments differ in practice. The table below summarizes critical differences in compute infrastructure, scalability, data control, security, support, and cost structure:

| Aspect | On-Premises AI Deployment | Cloud AI Deployment |
|---|---|---|
| Compute & Scalability | Capacity is fixed by the hardware you own; scaling up means procuring and installing more servers or GPUs, which takes upfront investment and lead time. | Elastic resources on demand (e.g., AWS, Azure instances); can scale up or down instantly, paying only for what is used. Large-scale experiments can be run without upfront hardware costs. |
| Data Control & Residency | Complete control over data location and access; data stays within company facilities. Ideal for sensitive or regulated data requiring local storage. | Data is stored and processed in the provider’s data centers, so control is less direct; residency and access restrictions must be secured through contract commitments. |
| Security Posture | Security is managed in-house: the company sets and maintains all security measures, including access controls. Offers a high degree of control, but security is only as strong as the enterprise’s capabilities. | Security is largely outsourced to the cloud provider’s systems. Reputable cloud vendors invest heavily in security, but implementation shortfalls or less mature vendors can pose risks. Define in the contract who bears responsibility for security incidents. |
| Support & Maintenance | Internal or vendor-provided support for on-prem software/hardware. Typically requires a maintenance contract; support is often 9–5 with options for paid 24/7 support. The enterprise must apply patches and upgrades. | Cloud services include maintenance of infrastructure and often 24/7 operations by the provider. The vendor handles updates. Enterprises should ensure Service Level Agreements (SLAs) guarantee uptime and support response (often spelled out as part of the cloud contract). |
| Cost Model | High CapEx upfront for hardware, licenses, and data center facilities. Perpetual or term licensing for software is common, plus ongoing maintenance fees (annual support ~15–20% of license cost). Hardware depreciation over the years can make on-prem cost-effective at scale, but under-utilization risk is high if AI workloads are sporadic. | OpEx subscription model – pay-as-you-go for usage or annual subscriptions. Little upfront cost, but operational expenses can accumulate. Cost scales with usage; it is easy to start small, but beware of hidden costs like data egress or per-API-call fees that can cause budget overruns. Multi-year cloud contracts often offer discounts, but long-term spend may exceed owning hardware if usage grows. |

On-premises AI typically provides maximum control. Organizations with strict regulatory requirements or highly sensitive data often favor on-prem solutions for greater control over data and enhanced security. However, this comes at the cost of significant investment in hardware, IT expertise, and ongoing maintenance. Cloud AI offers unmatched flexibility and scalability – ideal for enterprises that need to experiment, prototype quickly, or handle spiky workloads without huge upfront costs. The downside is potential vendor lock-in and less direct control. Cloud customers must remain vigilant about changes in service or pricing and ensure they have exit strategies if things go awry.
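The cost trade-off between the two models can be made concrete with a rough break-even estimate. The sketch below is illustrative only: the dollar figures, the four-year horizon, the hourly cloud rate, and the function names are all hypothetical assumptions. The only number taken from the table above is annual maintenance at roughly 15–20% of license cost.

```python
def on_prem_total_cost(hardware, license_fee, maintenance_rate, annual_ops, years):
    """Cumulative on-prem cost: upfront CapEx plus yearly maintenance and operations."""
    upfront = hardware + license_fee
    yearly = license_fee * maintenance_rate + annual_ops
    return upfront + yearly * years


def cloud_total_cost(hourly_rate, hours_per_year, years):
    """Cumulative pay-as-you-go cost for a given number of compute hours per year."""
    return hourly_rate * hours_per_year * years


def break_even_hours_per_year(on_prem_total, hourly_rate, years):
    """Annual usage (hours) above which on-prem becomes cheaper than cloud."""
    return on_prem_total / (hourly_rate * years)


# Hypothetical inputs, chosen only to illustrate the arithmetic.
YEARS = 4
on_prem = on_prem_total_cost(
    hardware=400_000, license_fee=100_000,
    maintenance_rate=0.18,  # within the ~15-20% range cited above
    annual_ops=50_000, years=YEARS,
)
threshold = break_even_hours_per_year(on_prem, hourly_rate=30.0, years=YEARS)
print(f"On-prem total over {YEARS} years: ${on_prem:,.0f}")
print(f"Break-even usage: about {threshold:,.0f} compute hours per year")
```

Under these assumed numbers, sporadic workloads that stay well below the break-even usage favor cloud, while sustained heavy usage favors owning the hardware. A real TCO analysis would also account for discounts, depreciation, and staffing.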

Common Enterprise AI Deployment Scenarios

Enterprise AI needs vary. Below are a few common deployment scenarios and how on-prem vs. cloud considerations often play out in each:

- Machine Learning Model Training: Training ML models (especially deep learning) is computationally intensive. Enterprises with constant, heavy training needs (e.g., vision or risk models retrained daily) might invest in on-prem GPU clusters or appliances (like NVIDIA DGX servers) to avoid recurring cloud bills. On-prem training keeps large datasets local for faster access. Conversely, organizations with occasional or bursty training jobs often leverage cloud platforms (AWS SageMaker, Azure Machine Learning, Google Vertex AI) to spin up GPU/TPU compute on demand. The cloud approach avoids idle hardware when experiments conclude, and simplifies accessing specialized hardware. Hybrid training is also common – e.g., initial development on cloud, then moving refined training in-house for cost or compliance reasons.

- AI-Powered Data Analytics: Many enterprises use AI/ML to derive insights from big data (customer analytics, predictive maintenance, etc.). If data is already aggregated in cloud data lakes or warehouses, running AI analytics in the cloud (e.g., using Databricks on AWS/Azure, or Google BigQuery ML) can minimize data movement. For data that resides in on-prem databases or must stay on-prem for compliance, companies may deploy AI analytics tools locally (for example, using IBM Watson Studio or SAS Viya on-premises) so that sensitive data never leaves their controlled environment. Data gravity is a deciding factor: AI often runs where the bulk of data lives.

- Large Language Models (LLMs) and Generative AI: LLMs (e.g., GPT-style models) present a special case. Using a public cloud LLM service (OpenAI API via Azure OpenAI, Google’s PaLM on Vertex AI, etc.) offers immediate capability but raises data privacy questions – enterprise inputs could be used to improve the provider’s model unless contracts forbid it. Some companies deploy LLMs on-prem (using open-source models fine-tuned on their data) to ensure queries and proprietary data never leave their premises. On-prem LLM deployment requires massive computing and expertise, so many start with the cloud for experimentation and only bring models in-house if long-term cost or privacy demands justify it. Hybrid approaches are emerging: e.g., running smaller generative models at the edge or on-prem for data containing PII, while using cloud AI for non-sensitive tasks.

- Computer Vision and Edge AI: AI vision systems in manufacturing, retail, or healthcare may need real-time processing with high privacy. On-premises deployment (or edge devices on-prem) is common for video analytics on factory floors or in stores – this minimizes latency and keeps video data local for privacy. NVIDIA’s edge AI hardware and Azure Stack Edge appliances are examples of products that enable on-prem vision inference. In contrast, aggregating images/videos to the cloud for analysis (using services like Amazon Rekognition or Google Vision API) provides virtually unlimited processing power and easier model updates, but it can raise bandwidth costs and privacy issues. Often, enterprises process sensitive visual data on-prem and use cloud AI for less sensitive, aggregated insights.
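The hybrid pattern that recurs in the scenarios above (sensitive or latency-critical work stays on-prem, bursty non-sensitive work goes to cloud) can be expressed as a simple placement rule. This is a minimal sketch of one possible rule of thumb, not a recommended policy: the `Workload` fields and the `choose_deployment` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    contains_pii: bool        # data must stay in a controlled environment
    latency_sensitive: bool   # e.g. real-time vision on a factory floor
    bursty: bool              # occasional jobs that would leave hardware idle


def choose_deployment(w: Workload) -> str:
    """Assumed rule of thumb: privacy and latency needs override cost considerations."""
    if w.contains_pii or w.latency_sensitive:
        return "on-prem"
    if w.bursty:
        return "cloud"
    # Steady, non-sensitive workloads: decide on a cost comparison instead.
    return "compare-cost"


workloads = [
    Workload("factory video analytics", contains_pii=False, latency_sensitive=True, bursty=False),
    Workload("quarterly model retraining", contains_pii=False, latency_sensitive=False, bursty=True),
    Workload("LLM over customer records", contains_pii=True, latency_sensitive=False, bursty=True),
]
for w in workloads:
    print(f"{w.name}: {choose_deployment(w)}")
```

In practice the inputs would come from a data classification exercise, and data gravity (where the bulk of the data already lives) would be an additional factor.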

Vendor and Platform Landscape

Multiple vendors provide AI platforms suited to one model or the other (and some offer both). Key players include:

- IBM Watson and Cloud Pak for Data: IBM offers Watson AI services that can run on IBM’s cloud or be containerized on-prem via Cloud Pak for Data. This hybrid approach caters to enterprises needing Watson’s NLP, ML, or Watson Assistant chatbot capabilities in a private environment. IBM’s on-prem solutions appeal to regulated industries that value Watson’s AI capabilities but must keep data in-house.

- NVIDIA (Hardware and Frameworks): NVIDIA dominates AI hardware with GPUs. Enterprises opting for on-prem AI often use NVIDIA-based infrastructure – from individual GPUs in servers to dedicated NVIDIA DGX systems for AI. NVIDIA also provides an AI Enterprise software stack and pre-trained models (through the NVIDIA NGC catalog) that can be used in private data centers. In the cloud, all major providers offer NVIDIA GPU instances; NVIDIA partners with cloud platforms (e.g., Azure’s ND-series VMs, AWS P-family instances) to deliver GPU acceleration as a service.

- Databricks: Databricks is a unified data and AI platform that originated in the cloud (available on AWS, Azure, Google Cloud). It’s commonly used with Apache Spark for big data analytics, ETL, and ML at scale. While not a traditional on-prem product, Databricks can be deployed to a customer’s virtual private cloud and integrates with on-prem data sources. Enterprises often use Databricks to build ML pipelines in the cloud adjacent to their data lakes. Alternatives like Cloudera Machine Learning or Open Data Analytics Platform (ODAP) can be deployed on local clusters for those needing on-premises big-data AI.

- AWS, Microsoft Azure, and Google Cloud AI Services: The “big three” cloud providers have extensive AI offerings. AWS’s AI stack ranges from AI infrastructure (EC2 GPU instances) to managed ML services (SageMaker) and pre-built AI APIs (Amazon Rekognition, Comprehend, etc.). Microsoft Azure offers Azure Machine Learning, Cognitive Services, and an integration of OpenAI’s models. Google Cloud provides Vertex AI for an end-to-end ML workflow and generative AI APIs. These cloud platforms excel in breadth of services and scalability – enterprises can choose anything from training their own models to leveraging advanced pre-trained models, all under a cloud subscription. The trade-off is that solutions are tied to each cloud’s ecosystem and pricing; negotiating enterprise agreements with these providers is key to managing costs and rights.

Each vendor/platform comes with its own contract model. For example, SaaS AI services (like an AI analytics SaaS or an LLM API) will have cloud service agreements, while purchasing on-prem AI software or a hardware appliance involves license or purchase agreements. We’ll explore how these contracts differ.

Key Differences in Contract Terms (On-Prem vs Cloud)

When evaluating AI deployment models, enterprise buyers must scrutinize contract terms closely. Some of the biggest differences between on-premises agreements and cloud service agreements include:

- Data Ownership & Usage Rights: In on-prem deployment, there’s no ambiguity that the enterprise owns and controls its data – the data never leaves your systems. Cloud contracts must explicitly affirm that the customer retains ownership of all data uploaded. Importantly, negotiate restrictions on the provider’s use of your data: for instance, no secondary use for training or analytics without consent. Cloud vendors often include language allowing aggregated or anonymized data use; enterprises should limit this. Recent trends driven by AI concerns show businesses demanding guarantees that their cloud provider will not use their data to train AI models without permission. In summary, on-prem = full data control by default, whereas cloud = ensure contractually that your data is yours and is only used to deliver the service.

- Licensing Model (Perpetual vs Subscription): On-prem software is usually sold as a license, often a perpetual license with ongoing support fees, or a term license for a set duration. The contract grants the company rights to install and use the software (often limited by number of users, servers, or CPUs). The vendor’s obligation largely ends after delivery, except for support. Cloud AI services use a subscription model – you pay for access, but do not own the software. The vendor’s obligation is continuous (they must keep the service running each month). This difference affects many terms: for on-prem, you might negotiate volume discounts and usage flexibility (e.g., the right to deploy on multiple machines or in DR sites), whereas for cloud, you focus on service terms and recurring fees. Also, cloud pricing may be usage-based (pay per API call or hour of compute), so ensure the pricing model is clear and aligns with your usage patterns.

- Service Level Agreements (SLAs): Cloud contracts typically include uptime, performance, and support response SLAs. For example, a provider might guarantee 99.9% uptime and offer service credits if availability drops below that. These SLAs (and remedies) should be carefully reviewed and negotiated, especially for mission-critical AI workloads where downtime could be costly. On-prem software agreements, in contrast, usually do not have uptime SLAs (since you run the software yourself). Instead, on-prem deals may have support SLAs (e.g., vendor will respond to a Severity-1 issue within 1 hour). Ensure any support agreement for on-prem AI includes appropriate response times and perhaps penalties or credits if the vendor fails to meet them. With cloud AI, consider including performance metrics (latency, throughput) if relevant, as well as monitoring/reporting rights so you can verify SLA compliance.

- Support & Maintenance: For on-premises software, support is usually an add-on annual contract, so it’s important to clarify support scope (e.g., 24/7 vs business hours, on-site support availability, number of support contacts, etc.) and what maintenance includes (patches, minor upgrades, major upgrades). Often, major version upgrades might require new licenses or fees – negotiate discounts or rights to upgrade if possible. In cloud models, basic maintenance is part of what you’re paying for, but premium support (dedicated technical account managers, faster response) may cost extra. One key difference: cloud vendors typically handle all updates/upgrades continuously, so include terms about advance notice of significant changes or deprecations. On-prem contracts might also include end-of-life commitments (e.g., vendor will support the software version for X years). In any case, ensure you know how long the AI system will be supported and what happens if the vendor drops support.

- Audit Rights and Compliance: On-prem software licenses often include the vendor’s right to audit the customer’s usage to ensure they are not exceeding licensed counts or violating terms. These audit clauses should be scoped reasonably (e.g., audit no more than once a year with advance notice). In cloud service agreements, traditional license audits aren’t needed (since you can’t use more than you pay for), but compliance and security audits become a factor. Enterprise customers may negotiate rights to review the vendor’s security audits or certifications (SOC 2 reports, ISO 27001 certificates, etc.), especially if regulatory compliance is at stake. You might not get to audit the provider directly, but you can require annual compliance reports or the right to ask about their controls. Also, ensure the contract includes the provider’s obligation to comply with specific laws (HIPAA BAA for healthcare data, GDPR commitments for EU data, etc.) relevant to your industry.

- Termination and Exit Terms: The ability to terminate and migrate is very different between on-prem and cloud. With an on-prem perpetual license, you can use the software indefinitely – termination usually just means stopping support or a license term ending, and there’s no refund. Cloud contracts, however, should be scrutinized for exit terms: What happens to your data upon termination? Ensure the contract obligates the provider to return or destroy data at your instruction and in a usable format. Check if there are early termination fees or auto-renewal clauses – negotiate flexibility if possible (for example, the right to terminate for convenience with notice after an initial period, or lenient ramp-down terms). Avoid multi-year cloud commitments without an escape hatch, unless heavily compensated by discounts. Additionally, for critical AI systems, include an exit assistance clause: the vendor should reasonably assist in transitioning your data or models back to you or to a new provider. Cloud agreements may also allow termination without penalty if the vendor breaches the SLA consistently – consider negotiating that as a protection.

- Intellectual Property and Deliverables: When contracting for AI solutions, clarify IP ownership of any customizations, models, or deliverables. In on-prem software deals, the software IP stays with the vendor, but any data models or configurations you create are yours. If the vendor is developing a custom AI model or implementing solutions for you (common in AI projects), ensure the contract states who owns the resulting model or code. In cloud AI services, also be cautious of any language around machine learning models – for example, if you upload training data and train a model using a cloud service, do you own the model? (Many cloud ML platforms say you do, but double-check.) Also, include language that the provider cannot use your trained models or derived insights for other purposes without permission. If the AI service is proprietary (e.g., a SaaS analytics tool), you might negotiate rights to your configured instance or export. For generative AI specifically, consider an IP indemnity – if the service outputs infringing content or code, the vendor should indemnify the customer.

- Liability and Indemnification: Contracts for both models will limit the vendor’s liability, but the context differs. On-prem software vendors often argue their liability ends with the software delivery – any consequential damages from how you use it are largely your risk. Cloud providers typically run critical infrastructure; their standard contracts often cap liability at a month’s fees or less. This can be a huge issue if an AI service failure or data breach causes substantial losses. Enterprise buyers should push back on liability caps that are too low for the risk involved and seek specific indemnities for data breaches or IP infringement. For on-prem, if the software processes sensitive data, ensure the vendor will indemnify you if the software (or included AI model) violates someone’s IP or privacy rights. Also, negotiate carve-outs to limitations of liability: e.g., if the AI provider causes a major data breach through negligence, they should accept higher liability. While many providers resist increasing caps, getting 2–3x annual fees or a custom cap for data breach can better align incentives. In both cases, check if the contract has mutual indemnities (you might indemnify the vendor for your misuse of their product – that’s fair as long as it is not too open-ended).

In summary, on-premises contracts focus on licensing terms, support, and ensuring you have the rights to use software as needed. Cloud contracts focus on service commitments, data rights, and operational assurances. Neither model is inherently better from a contract standpoint – each simply shifts what needs to be negotiated.
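To see what an uptime SLA actually permits, it helps to convert the percentage into minutes of allowed downtime and to model how service credits would apply. The sketch below assumes a 30-day month and a hypothetical tiered credit schedule; real credit tiers vary by provider and contract, and the function and constant names here are illustrative.

```python
MINUTES_PER_DAY = 24 * 60

# Hypothetical credit schedule: (minimum uptime %, credit as a fraction of the monthly fee).
# Tiers are checked from best to worst; anything below the last tier gets FLOOR_CREDIT.
CREDIT_TIERS = [(99.9, 0.0), (99.0, 0.10), (95.0, 0.25)]
FLOOR_CREDIT = 0.50


def allowed_downtime_minutes(uptime_pct, days=30):
    """Minutes of downtime per period that still satisfy the uptime commitment."""
    return days * MINUTES_PER_DAY * (1 - uptime_pct / 100)


def measured_uptime_pct(downtime_minutes, days=30):
    """Uptime percentage implied by the observed downtime."""
    total = days * MINUTES_PER_DAY
    return 100 * (total - downtime_minutes) / total


def service_credit(uptime_pct, monthly_fee):
    """Credit owed under the assumed tier schedule."""
    for threshold, credit in CREDIT_TIERS:
        if uptime_pct >= threshold:
            return monthly_fee * credit
    return monthly_fee * FLOOR_CREDIT


for sla in (99.9, 99.99):
    print(f"{sla}% uptime allows {allowed_downtime_minutes(sla):.2f} min of downtime per 30 days")

# Example: a 6-hour outage in one month against a hypothetical $10,000 monthly fee.
uptime = measured_uptime_pct(downtime_minutes=360)
print(f"Measured uptime {uptime:.3f}% -> credit ${service_credit(uptime, 10_000):,.2f}")
```

Running the numbers this way makes it easier to judge whether the offered credits are meaningful relative to the business cost of the downtime they compensate.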

Risks and Trade-offs in Contract Language

Both deployment models come with risks that should be addressed in contract negotiations. Key risks and trade-offs to watch for include:

- Hidden Costs and Budget Overrun: Cloud AI services can carry hidden or variable costs that surprise customers. Data egress fees (the cost to pull data out of the cloud) are a common culprit, as are charges for API calls, storage overages, or required support plans. Some businesses have reported exceeding cloud budgets by 20% or more due to unexpected costs. Mitigation: Insist on pricing transparency and consider contract provisions to cap or fix certain costs. For example, negotiate volume discounts, commit to a spend to get lower unit costs, or include a clause that you must approve any usage that would incur significant overage fees. On-prem deployments avoid many usage-based fees, but have their own hidden costs – e.g., electricity, cooling, IT staff time, hardware maintenance, and periodic upgrades. Procurement should do a total cost of ownership (TCO) analysis for on-prem solutions and ensure contracts for hardware include replacement parts and support, so those future costs are known.

- Vendor Lock-In and Portability: Both models can create lock-in, albeit in different ways. With cloud AI, proprietary services can make moving technically or financially difficult. For example, if you heavily use a cloud provider’s custom ML APIs, rewriting that for another platform is costly. Additionally, getting your data out can be challenging; there have been cases where customers faced hefty extraction fees to retrieve their data after terminating a contract. To avoid this, negotiate strong data portability rights upfront: the provider should give your data (and preferably model artifacts, logs, etc.) back in a standard format, at no or minimal cost. For on-prem, lock-in might come from dependence on a specific vendor’s hardware or software. If you’ve invested millions in an AI appliance or licensed platform, you’re less agile in switching to something else. Mitigate this by negotiating options such as transferability of licenses (to new platforms or cloud) or swap-out programs, and by favoring solutions built on open standards that you could maintain yourself if needed. Contract tip: In long-term deals, consider adding a review clause – e.g., after 2 years, you can evaluate new options or renegotiate if market prices drop, so you’re not stuck with outdated pricing or tech.

- Compliance and Data Sovereignty: Enterprises in regulated sectors (finance, healthcare, government, etc.) must ensure that whichever model they choose meets compliance obligations. With cloud, this means carefully reviewing the provider’s certifications and contract commitments. Does the provider sign a Business Associate Agreement for HIPAA? Will they commit to data residency (storing data in specified countries or data centers)? Contracts should document any geographical restrictions for data storage and processing. It’s wise to require that cloud vendors maintain key security certifications (SOC 2 Type II, ISO 27001, FedRAMP if government data, etc.) and notify you if they lapse. Some enterprises negotiate the right to audit the provider’s facilities or request audit reports to satisfy regulators. On-prem deployments put the compliance burden on you – you have full control over, and full responsibility for, ensuring security. Ensure any on-prem software meets necessary standards (e.g., does the AI software log data access in a way that helps you with GDPR requests?). Also, privacy laws might restrict data leaving your premises if you use cloud services. Whichever way you’re using AI, include clauses about breach notification times and cooperation so you can meet legal duties in case of an incident.

- Performance and Availability Risks: Cloud AI performance can be subject to the provider’s environment and internet connectivity. If your application is latency-sensitive (say, high-frequency trading algorithms or real-time recommendations), a cloud outage or slowdown could be disastrous. Downtime in cloud services has caused major losses for enterprises that were not prepared: 30% of businesses reported significant losses from cloud outages in 2023. To mitigate, ensure the SLA covers not just uptime but also performance metrics where possible, and have an up-to-date disaster recovery plan. The contract should cover disaster recovery and continuity – e.g., does the provider offer failover to another region? How quickly do they aim to recover? For on-prem, performance issues might arise if you under-provision hardware or there’s an internal failure. While those are under your control, you might negotiate with on-prem vendors for performance guarantees on their hardware (e.g., if an AI appliance doesn’t meet the throughput benchmarks promised, you have remedies). Generally, performance risk is managed by conducting thorough testing (proof-of-concept trials) and including expectations in the contract or statement of work.

- Future Technology Changes: AI technology evolves quickly. What if a new AI model or platform emerges that you want to adopt? Cloud services can roll out new features continuously, which is a benefit, but also a risk if they change something in a way that breaks your use case. Ensure the cloud contract has change management provisions: advance notice of major changes, deprecation policy (e.g., old API versions supported for X months after new release), etc. For on-prem, watch for commitments that lock you into a specific technology generation. You may want a clause that allows you to swap to a different product or cloud service if the on-prem solution falls behind (perhaps with the vendor’s help or credit). It’s also wise to negotiate pricing protections for new tech – e.g., if the vendor releases a faster hardware model next year, can you trade in or upgrade at a discount?

In essence, enterprise buyers should approach AI contracts with a healthy skepticism and a focus on “what if” scenarios. What if we want to exit? What if costs grow too fast? What if a breach happens? Thinking through these scenarios and addressing them in contract language is critical to avoid unpleasant surprises.
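One of these scenarios, costs growing too fast, can be stress-tested before signing by estimating a monthly bill that includes the hidden items mentioned above (API overage and data egress). This is a minimal sketch with made-up rates: the fee structure, the parameter names, and every price are assumptions for illustration, not any provider's actual pricing.

```python
def monthly_cloud_bill(base_fee, api_calls, included_calls, overage_per_1k_calls,
                       egress_gb, egress_per_gb):
    """Break a month's bill into base fee, API overage, and data egress."""
    overage_calls = max(0, api_calls - included_calls)
    overage = overage_calls / 1000 * overage_per_1k_calls
    egress = egress_gb * egress_per_gb
    return {
        "base": base_fee,
        "overage": overage,
        "egress": egress,
        "total": base_fee + overage + egress,
    }


def budget_overrun_pct(total, budget):
    """Percentage by which the bill exceeds (positive) or undershoots (negative) budget."""
    return (total - budget) / budget * 100


# Hypothetical month: usage grows 50% past the included quota and 2 TB is exported.
bill = monthly_cloud_bill(
    base_fee=5_000, api_calls=1_500_000, included_calls=1_000_000,
    overage_per_1k_calls=2.00, egress_gb=2_000, egress_per_gb=0.09,
)
print(bill)
print(f"Overrun vs a $5,000 budget: {budget_overrun_pct(bill['total'], 5_000):.1f}%")
```

Plugging in a vendor's quoted rates and a pessimistic usage forecast gives a concrete figure to bring to the negotiation when asking for caps or discounted overage rates.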

Negotiation Tips for On-Premises AI Contracts

When negotiating contracts for on-prem AI software or equipment, consider the following tips to protect the organization’s interests:

- Scope the License Broadly: Ensure the license terms cover all intended uses. For example, if you plan to use the software in a clustered environment or multiple locations, verify that the contract permits it. It’s often better to license per user or enterprise-wide rather than per-device if possible (especially for AI software that might run on many servers). Negotiate license metrics so they won’t hinder later deployment – e.g., unlimited internal use, or a high cap on data volume or users to accommodate growth.

- Negotiate Maintenance and Support Upfront: Vendors may treat the license and support as separate sales; push for a combined deal with rate locks. For instance, if you buy a perpetual license, negotiate the annual maintenance fee percentage and cap any yearly increase. Define support SLAs: e.g., 24/7 support if your AI runs in production 24/7, with specified response times. Make sure the contract spells out how you receive updates/patches and for how long. If the AI product roadmap includes future versions, try to include upgrade rights, like “entitled to version upgrades for X years,” or at least discounted upgrades.

- Limit Audit Intrusions: If the vendor insists on an audit clause (common in on-prem licenses to verify that you are not exceeding the license or sharing the software), negotiate it to be reasonable. Include requirements like: audits no more than once a year, at your premises during business hours, with prior written notice (e.g., 30 days), and the auditor must comply with your security policies. Also, clarify that any discovered overuse will be handled by purchasing additional licenses at contract rates (no punitive penalties). Tight audit terms prevent vendors from conducting disruptive fishing expeditions in your environment.

- Include Performance and Acceptance Criteria: Consider adding acceptance testing clauses if buying expensive AI hardware or a complex software solution. For example, define key performance benchmarks (throughput, inference latency, etc.) that the system must meet in your environment. If it fails, you can require the vendor to remediate it or even return the product. This is especially important if the purchase is a first-of-its-kind deployment. While many vendors will default to standard warranty terms, you can negotiate custom performance commitments in the contract or SOW.

- Ensure Data and Model Security: Even though on-prem means data stays with you, vendors might need remote access for support or may collect telemetry. Include clauses to control this – e.g., the vendor will not extract any of your data from the system except as necessary for support and with notice. If the AI software phones home or updates automatically, ensure you know what information is transmitted. Also bind the vendor to confidentiality for any data they may see during support. If the system involves a pretrained model provided by the vendor (say, a vision model included with the software), clarify that the output and any fine-tuned versions of the model are yours to use, and that they won’t reuse your fine-tuning data.

- Plan for End-of-Life and Transition: Get commitments regarding how long the software/hardware will be supported. If you’re buying an AI appliance, what’s the hardware warranty period? Can you purchase extended support after the initial period? Also, negotiate what happens if you choose to discontinue use. For example, if it’s a subscription license on-prem (some vendors do term licenses on-prem), can you extend for a short period to transition off? While on-prem deals don’t usually include “exit assistance” like cloud, you might want provisions for getting continued access to your data in standard formats or getting an escrow of source code if the vendor goes under (this is more common in critical systems: source code escrow can be negotiated so you’re not left stranded if the vendor fails to support the product).

- Total Cost Clarity: Insist that all needed components are identified in the contract to avoid future cost surprises. Does the software require separate modules or third-party licenses (e.g., database, OS, or AI framework licensing)? Make it the vendor’s responsibility to spell that out. For hardware, ensure the price includes all necessary components (some systems might sell base units and charge extra for memory/GPU upgrades – clarify upfront). Also, negotiate a pricing hold for additional units or licenses for some time. If you might expand next year, try to lock in this year’s pricing or a cap on increases so you don’t face a huge price hike later, once you’re tied to the solution.
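The value of capping yearly maintenance increases, as suggested in the maintenance tip above, shows up when the fees are projected over the life of the license. The following sketch compares a capped and an uncapped escalation; the license price, the 18% maintenance rate, both escalation percentages, and the five-year horizon are hypothetical inputs chosen for illustration.

```python
def maintenance_schedule(license_fee, maintenance_rate, annual_increase, years):
    """Yearly maintenance fees, starting at license_fee * maintenance_rate
    and compounding by annual_increase each subsequent year."""
    fee = license_fee * maintenance_rate
    schedule = []
    for _ in range(years):
        schedule.append(round(fee, 2))
        fee *= 1 + annual_increase
    return schedule


LICENSE_FEE = 1_000_000   # hypothetical perpetual license price
RATE = 0.18               # assumed first-year maintenance as a share of license cost
YEARS = 5

capped = maintenance_schedule(LICENSE_FEE, RATE, annual_increase=0.03, years=YEARS)
uncapped = maintenance_schedule(LICENSE_FEE, RATE, annual_increase=0.08, years=YEARS)

print("Capped at 3%/yr:  ", capped)
print("Uncapped at 8%/yr:", uncapped)
print(f"Difference over {YEARS} years: ${sum(uncapped) - sum(capped):,.2f}")
```

The same projection can be rerun with the vendor's proposed terms to quantify what a negotiated cap is worth before trading it against other concessions.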

Negotiation Tips for Cloud AI Contracts

Cloud AI contracts (SaaS, PaaS, or cloud infrastructure deals) require a slightly different focus. Here are specific tips for negotiating cloud AI agreements:

- Secure Strong SLAs with Remedies: Don’t accept just the default uptime SLA. If your AI service is critical, negotiate for higher uptime (e.g., 99.9% or 99.99%, depending on need) and meaningful remedies. Service credits for downtime are standard, but you can also negotiate clauses like the right to terminate if SLA breaches happen for several consecutive months. Ensure the SLA covers not just uptime but also critical support response times. For example, if using a cloud ML platform for a trading system, you may need a <1 hour response on high-severity issues – get that in the contract (perhaps via a premium support plan).

- Data Protection, Privacy, and Residency: As noted, explicitly retain data ownership and forbid its use for anything but providing the service. Add language requiring the provider to comply with privacy laws (GDPR, CCPA, etc.) and promptly notify you of any data breach affecting your data (cloud vendors should agree to immediate or 24-hour notification for breaches; don’t settle for vague wording). If the location of data matters, include a data residency clause (“Customer’s data will be stored and processed only in data centers located in __”). If the AI service involves transferring data to third-party AI models (for example, a service that uses OpenAI’s API behind the scenes), ensure the contract discloses that and extends data protection commitments to those sub-processors. You may also negotiate the right to annual security audits or reports to keep the provider accountable.

- Cost Controls and Transparency: Cloud costs can spiral with usage. Negotiate pricing terms that give you predictability: for instance, secure a discount or a volume tier that fits your forecast. Try to cap variable fees – e.g., cap the fees for API calls beyond a certain volume, or get discounted overage rates. Insist on the ability to monitor usage in real time (to spot cost spikes) and perhaps a contractual clause that the provider will alert you, or require your approval, if usage charges exceed a threshold. If possible, negotiate a rate lock for renewals or a cap (e.g., no more than 5% increase in subscription fees year-over-year) to avoid lock-in price gouging. Everything about pricing and billing should be documented – including what happens if you exceed quotas (do they auto-upgrade you and charge more, or stop the service? Prefer a non-automatic upgrade).

- Vendor Responsibilities in AI Outcomes: If using a higher-level AI service (like an AI-powered analytics tool or an ML model provided as a service), consider what happens if it produces errors or problematic results. While vendors won’t usually guarantee outcomes, you can push for commitments around quality and fitness for purpose to some extent. At minimum, ensure there’s an escalation path if the AI outputs are wrong due to a platform issue (for example, if the service is supposed to detect fraud and it misses obvious patterns due to a bug, the vendor should fix it promptly). Also, clarify IP ownership of outputs – usually, the outputs from running your data through their AI are yours, but make sure the contract says so, especially if those outputs are models or insights you will use internally.

- Exit Strategy – Data and Model Portability: One of the most crucial cloud negotiation points is planning for exit on day 1. Specify how you can get your data out: the format, the assistance provided, and the timeframe. For instance, negotiate that upon contract termination or expiry, the provider will assist for X days in exporting your data (and maybe model artifacts) to you, and they’ll wipe your data from their systems afterwards (except any backups which should be secured until purged). Try to avoid or minimize any termination fees. If you must sign a long-term contract for discount reasons, at least include a clause that allows termination for material breach or if a change in law/regulation makes cloud use untenable (this can be important in regulated sectors). It’s also worth negotiating an option for a transition period: e.g., you can extend the service for a few months at the end of term at the same rate, if you need time to switch – otherwise you might be forced into renewal because your data is still there.

- Liability and Indemnity Carve-Outs: As discussed, cloud providers often limit liability heavily. Push for higher limits or carve-outs. For example, you might get a clause that says for data breach damages or confidentiality breaches, the vendor’s liability will be actual damages up to a higher cap (or even unlimited in cases of gross negligence). Also, ensure the contract has an intellectual property indemnification – if the AI service or any provided model infringes a third party’s IP, the vendor should defend and cover you. Similarly, if the service involves any open-source components, the vendor should ensure they are properly licensed and indemnify you against open-source license disputes. These protections are especially important in AI, where new IP issues (like training data copyrights) emerge.

- Compliance and Verification: If your company has to answer to auditors or regulators, write in rights that help you do that. For instance, a large bank using a cloud AI service might negotiate the right to perform an on-site audit of the cloud provider’s controls (or more commonly, the right for its regulators to do so). Even if providers push back, you can at least demand that regular compliance reports and certifications be provided. Additionally, include a clause that the provider will cooperate with any legal or regulatory inquiries related to the service (for example, if there’s an e-discovery or an investigation requiring data, they should assist under proper safeguards).

- Service Evolution and Vendor Lock-In Mitigation: Try to maintain leverage over time. One approach is negotiating a technology escrow or contingency for critical services – e.g., if the cloud provider ceases to offer the service or goes out of business, can they deposit the code in escrow or transfer the system on-prem to you as a last resort? This is not common with big providers, but with smaller AI startups it is worth discussing. At minimum, include a clause that if the vendor discontinues the service, you can terminate and get a refund for unused portions, and they must help with the transition. To combat lock-in, favor contracts that don’t penalize scaling down usage. Some cloud contracts commit you to a minimum spend – negotiate flexibility to reduce that if your needs change or if you partially move on-prem or to another cloud.

- Document Everything Promised: Cloud AI deals often move fast, and sales teams may promise capabilities or compliance that aren’t standard. It is important to capture each promise in the contract or in an attached service description. If the vendor says “your data will only be stored in your region” or “we will retrain the model exclusively for you,” make sure those commitments appear in the agreement. Verbal assurances mean little if the contract disclaims them. Also, ensure that any pilot or proof-of-concept results you expect to carry over are documented. For example, if a pilot achieved 95% accuracy and the solution is moving to production, consider referencing that figure as a target or documenting it in meeting notes shared between the parties. This creates accountability and a shared understanding, which can be enforceable if written into a service level or an addendum.
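
To make two of the figures in the tips above concrete, here is a minimal Python sketch of the arithmetic behind an uptime SLA and a renewal cap. The 99.9%/99.99% targets and the 5% cap come from the tips themselves; the 30-day month, the function names, and the 100,000 starting fee are illustrative assumptions, not terms from any real contract.

```python
# Illustrative arithmetic only. The uptime targets and the 5% cap come from
# the tips above; the 30-day month and the starting fee are assumed values.

MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 minutes


def allowed_downtime_minutes(uptime_percent: float,
                             period_minutes: int = MINUTES_PER_30_DAY_MONTH) -> float:
    """Return the downtime (in minutes) an uptime SLA permits over a period."""
    return period_minutes * (1 - uptime_percent / 100)


def capped_fee_schedule(first_year_fee: float, annual_cap_percent: float,
                        years: int) -> list[float]:
    """Return the worst-case fee per year if the cap is hit at every renewal."""
    return [round(first_year_fee * (1 + annual_cap_percent / 100) ** year, 2)
            for year in range(years)]


if __name__ == "__main__":
    for target in (99.9, 99.99):
        minutes = allowed_downtime_minutes(target)
        print(f"{target}% uptime permits about {minutes:.1f} minutes "
              f"of downtime per 30-day month")

    # Hypothetical 100,000 first-year fee with a 5% year-over-year cap.
    print(capped_fee_schedule(100_000, 5, 3))
```

Under these assumptions, moving from 99.9% to 99.99% shrinks the permitted downtime by a factor of ten, which is one way to judge whether the higher tier is worth its price. The fee schedule shows the worst case a capped renewal clause still allows, since the increases compound.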

By proactively negotiating these cloud terms, enterprises can significantly reduce the risk of unpleasant surprises and ensure the AI service aligns with their business needs and values from the outset.

Recommendations

In conclusion, CIOs and procurement teams should adopt a strategic approach when evaluating AI deployment models and negotiating contracts. Here are our key recommendations:

- Align Deployment Model with Business Priorities: Carefully assess the organization’s requirements for data sensitivity, scalability, and speed of innovation. If control and compliance are paramount, an on-premises or private cloud model may be worth the cost. If agility and quick scaling are top priorities, lean towards cloud – but weigh the hybrid approach (many enterprises blend both). Don’t choose cloud vs on-prem by hype; choose based on fit.

- Perform a Thorough Cost-Benefit Analysis: Look beyond direct costs. Calculate the full TCO of on-prem (including personnel and future upgrades) versus the long-term subscription costs of cloud (including potential growth in usage). Use this analysis in negotiations – for example, if cloud becomes more expensive over 3 years, use that to push for better pricing or concessions. Conversely, if on-prem has big upfront costs, ensure the business is ready for the CapEx and build a case for ROI (possibly by capitalizing the investment to show savings over time).

- Prioritize Data and Security in Contracts: Whichever model you choose, lock down data ownership, privacy, and security obligations in writing. For cloud deals, treat data rights and security provisions as deal-breakers. Require clarity on data use, breach notification, and compliance standards. For on-prem, require the vendor to provide security patches and not introduce vulnerabilities. If the AI will handle critical or personal data, involve your security and legal teams early to set the baseline requirements a vendor must meet.

- Negotiate for Flexibility: Adaptability is crucial in the fast-evolving AI landscape. Build flexibility into the contract – e.g., modular contract terms that let you add or remove capacity, change user counts, or swap technologies with minimal penalty. Avoid rigid long-term lock-ins unless the terms are heavily in your favor. Where possible, include review points (annual business reviews in cloud contracts can be used to renegotiate if usage or requirements have changed). Ensure you have an exit strategy on paper, even if you don’t expect to use it.

- Watch for Vendor Lock-In Signals: Be cautious with proprietary tools that don’t export data or models easily. During RFPs or POCs, ask vendors how you’d migrate away if needed. Favor solutions that support standard data formats and protocols. When negotiating, explicitly address lock-in concerns: for instance, add a clause for data export assistance or limit auto-renewal. The goal is to preserve your negotiating power over the life of the relationship.

- Leverage Competition in Negotiations: Use the competitive market to your advantage for both on-prem and cloud. Cloud providers often offer credits or discounts if they know you’re considering a rival. On-prem vendors might include extra modules or services at no cost to win a deal. Always solicit multiple bids or at least have a credible alternative in mind – it strengthens your position to get better terms on price, liability, and service levels.

- Engage Legal and Procurement Expertise: These contracts blend technical and legal complexity. Involve counsel who understands technology contracts (cloud agreements in particular, especially their data and SLA terms). Don’t shy away from seeking outside expert review for large deals – the cost is minimal compared to the potential risk buried in a bad contract. Pay attention to jurisdiction and governing law too (especially for cloud contracts with non-local providers), as it can affect your rights in enforcement.

- Continuously Monitor and Manage: After signing, contract management is key. Assign someone to monitor the vendor’s performance against SLAs, track renewals and notice periods, and compare usage against entitlements. If it’s a cloud service, use the reporting tools (or request custom reports) to ensure you get what you pay for. If it’s on-prem, ensure you get what you need and that the vendor remains responsive. Begin renewal or true-up conversations well before deadlines to avoid last-minute pressure. Treat the contract as a living framework and enforce your rights throughout the term.
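
The cost-benefit recommendation above can be roughed out in a few lines of Python. The sketch below compares a multi-year on-prem TCO (upfront CapEx, annual operating cost, one planned upgrade) with a cloud subscription whose spend grows with usage. Every figure, class name, and field name is a hypothetical placeholder chosen for illustration, and a real analysis would add items such as discounting, migration, and exit costs.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    upfront_capex: float       # hardware and licenses purchased at the start
    annual_operating: float    # personnel, power, support contracts
    upgrade_cost: float = 0.0  # one planned future upgrade
    upgrade_year: int = 0      # 1-based year of the upgrade; 0 means none


@dataclass
class CloudCosts:
    first_year_subscription: float
    annual_usage_growth: float  # e.g. 0.20 means spend grows 20% per year


def on_prem_tco(costs: OnPremCosts, years: int) -> float:
    """Sum CapEx, operating costs, and the upgrade if it falls in the window."""
    total = costs.upfront_capex + costs.annual_operating * years
    if 1 <= costs.upgrade_year <= years:
        total += costs.upgrade_cost
    return total


def cloud_tco(costs: CloudCosts, years: int) -> float:
    """Sum subscription spend, compounding the assumed usage growth yearly."""
    return sum(costs.first_year_subscription * (1 + costs.annual_usage_growth) ** y
               for y in range(years))


if __name__ == "__main__":
    # Placeholder figures only; substitute your own quotes and forecasts.
    on_prem = OnPremCosts(upfront_capex=500_000, annual_operating=150_000,
                          upgrade_cost=100_000, upgrade_year=3)
    cloud = CloudCosts(first_year_subscription=300_000, annual_usage_growth=0.20)

    horizon = 3
    print(f"On-prem {horizon}-year TCO: {on_prem_tco(on_prem, horizon):,.0f}")
    print(f"Cloud {horizon}-year TCO:   {cloud_tco(cloud, horizon):,.0f}")
```

With these placeholder inputs the cloud option comes out somewhat more expensive over three years. That is the kind of finding the recommendation suggests bringing to the table when asking a cloud provider for better pricing or concessions. Changing the growth rate or the horizon can reverse the result, so it is worth running several scenarios.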

By following these recommendations, enterprise buyers can act as effective stewards of their organization’s interests, ensuring that the contractual foundation is solid, balanced, and aligned with strategic objectives, whether AI is deployed on-premises or in the cloud. With AI poised to drive competitive advantage, setting the right terms now will pay dividends in agility, risk mitigation, and value realization in the long run.